Nailing website performance

A good habit I’ve got into over the last couple of years is making sure any website I build or update performs well. By that I don’t mean SEO; I mean loading times, lag, etc.

It largely comes down to good practices:

- Tidy, minimal HTML, CSS and JavaScript

- Minify everything!

- Optimise image size/quality

- Reduce requests

- Caching

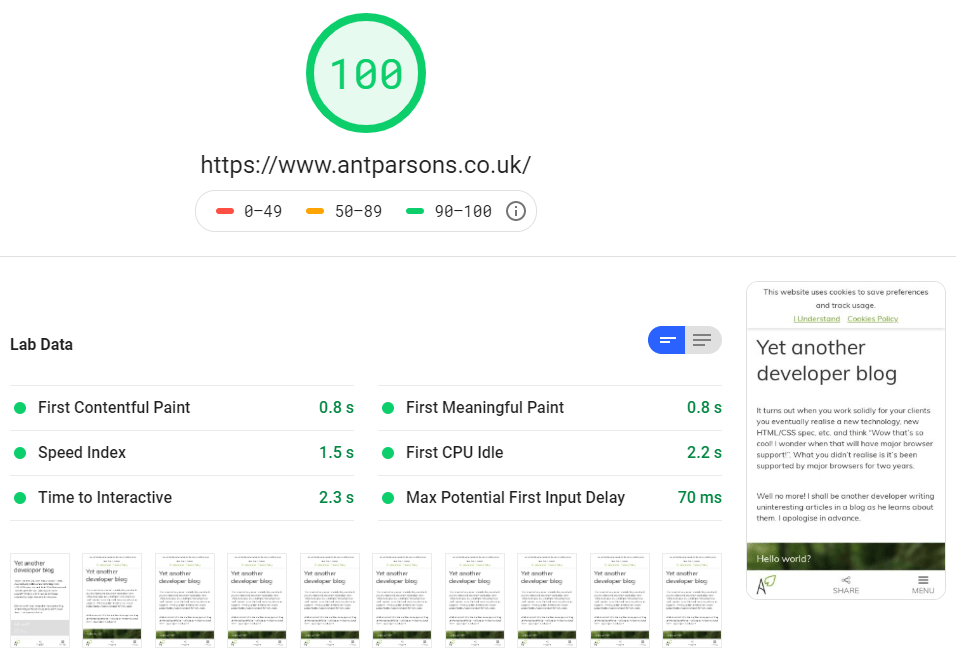

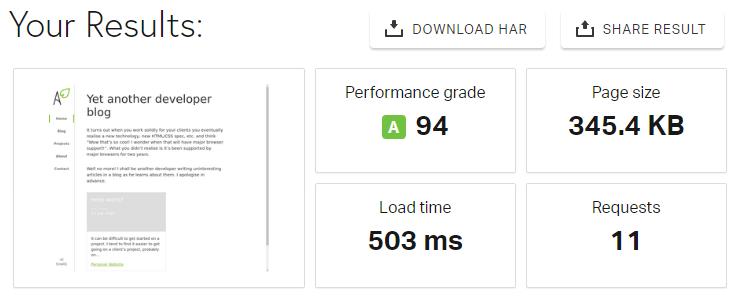

A good way to keep on top of this is to use Google PageSpeed and the Pingdom Website Speed Test.

HTML, CSS and JavaScript

It’s quite easy, especially with a larger website, to end up with really convoluted HTML, and with it CSS files you could scroll through for days. New features get added while older ones stick around, even when they’re no longer used! Preprocessors such as Sass and Less have both helped and hindered here: they make styles easier to organise, but nesting and mixins can quietly generate far more CSS than you actually wrote. Hopefully, with this website being so simple, this won’t be an issue.

JavaScript is often most effective when used sparingly. Gone are the days when we needed it for every transition and animation. Any transitions on this website are now done in CSS, simply triggered by JavaScript-controlled events.

Minify!

I must confess it took me longer than it should have to start minifying code. But now I make sure CSS and JavaScript are minified on both old and new websites. A 10 KB file might only shrink to 7 KB, but it all adds up!
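To give a rough idea of what a minifier actually does to CSS, here’s a deliberately naive sketch in Python. `minify_css` is a hypothetical helper, not how any particular plugin works: it just strips comments and unnecessary whitespace with regular expressions, and it ignores strings, `url()` values and the other edge cases a real minifier handles by properly parsing the CSS.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier (sketch): regex-based, ignores strings and url() values."""
    # Remove /* ... */ comments, including ones spanning several lines.
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)
    # Collapse every run of whitespace (spaces, tabs, newlines) into one space.
    css = re.sub(r"\s+", " ", css)
    # Drop spaces around braces, semicolons, commas and child combinators.
    css = re.sub(r"\s*([{};,>])\s*", r"\1", css)
    # Drop spaces after colons (e.g. "color: red" -> "color:red").
    css = re.sub(r":\s+", ":", css)
    # The last semicolon before a closing brace is optional.
    css = css.replace(";}", "}")
    return css.strip()


source = """
/* Header styles */
header {
    color: #333;
    margin: 0 auto;
}
"""
print(minify_css(source))  # header{color:#333;margin:0 auto}
```

Real minifiers go further, for example shortening colour values and merging duplicate rules, which is where more of the savings come from.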

I recently moved to VS Code, where plugins have made minifying the default, with no additional effort.

The total size of the CSS on this website is currently 19 KB. Most of this comes from Loophook, a heavily cut-down version of Bootstrap 3, which I intend to ditch in favour of CSS Grid in the near future.

Optimise images

I’ve always tried to be strict with myself and clients about making sure appropriately sized images get onto websites. Unfortunately, some clients, tech-savvy or not, often upload massive or minuscule ones. A CMS that automatically resizes and crops images helps, but it doesn’t guarantee perfection.

This website doesn’t have a huge amount of imagery, so that won’t be a problem. But the images at the top of the page can be quite large on desktop, so the website automatically produces three sizes of each and uses the srcset attribute to leave it to the browser to pick the most appropriate one for the device. Smaller devices such as smartphones should get the smaller images (lower resolution, smaller file size), while laptops and PCs should get the larger ones.
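As a rough sketch of the markup involved, the Python below builds an `<img>` tag whose `srcset` lists three widths. The `name-width.jpg` naming scheme, the widths and the default `sizes="100vw"` are all assumptions for illustration, not how this website actually names its files; `build_img_tag` is a hypothetical helper.

```python
from html import escape


def build_img_tag(base: str, widths: list[int], alt: str, sizes: str = "100vw") -> str:
    """Build an <img> tag whose srcset lists one file per width (hypothetical naming)."""
    widths = sorted(widths)
    # Each candidate is "URL <width>w"; the w descriptor tells the browser the file's real width.
    srcset = ", ".join(f"{base}-{w}.jpg {w}w" for w in widths)
    # src is the fallback for browsers that don't understand srcset.
    fallback = f"{base}-{widths[-1]}.jpg"
    return (
        f'<img src="{escape(fallback)}" srcset="{escape(srcset)}" '
        f'sizes="{escape(sizes)}" alt="{escape(alt)}">'
    )


print(build_img_tag("hero", [960, 480, 1920], "Header image"))
```

The `sizes` attribute tells the browser how wide the image will be displayed; combined with the `w` descriptors and the screen’s pixel density, that lets it choose a file before any CSS has loaded.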

On top of that, images can be optimised through compression. Online services such as tinyjpg.com let you upload an image and then download a compressed version. I found this service did an excellent job with the file size, but it often left too many compression artefacts on the image. So I use mozjpeg instead, which gives me greater control over the level of compression and the resulting loss in quality.
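If you’d rather script mozjpeg than run it by hand, something like the following could work. It assumes mozjpeg’s `cjpeg` is on your `PATH` and that your build accepts a JPEG as its input file; `cjpeg_command` and `compress` are hypothetical helpers, and the default quality of 80 is only a starting point, not a recommendation.

```python
import shutil
import subprocess
from pathlib import Path


def cjpeg_command(src: Path, dst: Path, quality: int) -> list[str]:
    """Build a mozjpeg cjpeg command line (assumes cjpeg is on PATH)."""
    if not 1 <= quality <= 100:
        raise ValueError("quality must be between 1 and 100")
    # Lower quality means a smaller file but more visible compression artefacts.
    return ["cjpeg", "-quality", str(quality), "-outfile", str(dst), str(src)]


def compress(src: Path, dst: Path, quality: int = 80) -> int:
    """Compress src into dst with cjpeg and return the new file size in bytes."""
    cmd = cjpeg_command(src, dst, quality)
    if shutil.which(cmd[0]) is None:
        raise FileNotFoundError("cjpeg (mozjpeg) was not found on PATH")
    subprocess.run(cmd, check=True)
    return dst.stat().st_size
```

Running `compress` at a few quality levels, say 70, 75 and 80, and comparing the outputs by eye is a simple way to find the point where artefacts start to show.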

Reduce requests

This is an interesting one (well, probably the least interesting to most people). Almost every time you add a third-party plugin or script to your website, you’re adding at least one request to the page. Each of these requests can add to the loading time of every page it’s on.

On larger websites, such as e-commerce ones, you often find that with various tracking codes, instant chat, newsletter sign-ups and so on, a fairly straightforward-looking page is requesting dozens, sometimes more than a hundred, files. This can become a nightmare to manage. As a developer, you sometimes feel the need to ask the client how much they really need a certain plugin or tracking code, because you know it’s having a noticeable impact on performance. But usually you end up having to leave it in.

The homepage of this website makes no more than 14 requests. To keep this number low, I’ve embedded the CSS and SVGs directly in the page’s HTML and restricted myself to a single tracking service, Google Analytics. I intend to get it lower still!
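A quick way to sanity-check a request count is to look at which files the HTML itself references. The sketch below uses Python’s built-in `html.parser` to collect `src` and `href` URLs; `SubresourceFinder` and `estimate_requests` are hypothetical names, and the result is only a lower bound, since it can’t see requests made by scripts (such as an analytics tag loading further files), fonts, CSS background images or `@import`s. It also shows why inlining helps: the inline `<style>`, the inline `<svg>` and the `data:` URI cost no extra requests.

```python
from html.parser import HTMLParser
from typing import Optional

# <link rel="..."> values that make the browser download the href (not exhaustive).
FETCHING_RELS = {"stylesheet", "icon", "preload", "manifest"}


class SubresourceFinder(HTMLParser):
    """Collect URLs of files an HTML page references directly (rough sketch)."""

    def __init__(self) -> None:
        super().__init__()
        self.urls: list[str] = []

    def _add(self, url: Optional[str]) -> None:
        # data: URIs are embedded in the page itself, so they cost no extra request.
        if url and not url.startswith("data:"):
            self.urls.append(url)

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag in ("script", "img", "iframe"):
            self._add(attributes.get("src"))
        elif tag == "link":
            rels = set((attributes.get("rel") or "").lower().split())
            if rels & FETCHING_RELS:
                self._add(attributes.get("href"))


def estimate_requests(html: str) -> int:
    """Lower-bound request count: the page itself plus the files it references."""
    finder = SubresourceFinder()
    finder.feed(html)
    finder.close()
    return 1 + len(finder.urls)


page = """
<html><head>
  <style>body { margin: 0 }</style>
  <link rel="stylesheet" href="/fonts.css">
  <script async src="https://www.google-analytics.com/analytics.js"></script>
</head><body>
  <svg viewBox="0 0 10 10"><circle cx="5" cy="5" r="4"/></svg>
  <img src="/hero-960.jpg" alt="">
  <img src="data:image/gif;base64,R0lGODlhAQABAAAAACw=" alt="">
</body></html>
"""
print(estimate_requests(page))  # 4: the page, fonts.css, analytics.js and hero-960.jpg
```

For the full picture, including everything scripts load at runtime, the network panel in your browser’s developer tools or a tool like Pingdom is more reliable.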

Caching

Finally, in this painfully long article, we’re onto caching. It amazes me how many websites still don’t enable caching! This could be because their hosting service doesn’t support it, because it’s an older website that isn’t well maintained, or because whoever developed it didn’t have the know-how, or didn’t care, to set it up.

Caching tells a browser how long to keep a copy of certain files, such as images, CSS and JavaScript files, fonts and more. This means a returning visitor hopefully doesn’t have to re-download the same files over and over again, which speeds up loading times.

Ideally, the cache lifetime should be at least a week, if not a month, several months or even a year. That is what I’ve done on this website.
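In practice, cache lifetimes are set with the `Cache-Control` response header, usually configured on the web server. As a server-agnostic sketch, this Python function picks a `max-age` (in seconds) from the file extension. The extension list and durations are assumptions for illustration, and `cache_control` is a hypothetical helper; HTML pages get `no-cache` so the browser checks for a fresh copy each time.

```python
from datetime import timedelta
from pathlib import PurePosixPath

WEEK = timedelta(weeks=1)
YEAR = timedelta(days=365)

# Hypothetical policy: long lifetimes for static assets, keyed by file extension.
MAX_AGE_BY_EXTENSION = {
    ".css": YEAR,
    ".js": YEAR,
    ".woff2": YEAR,
    ".jpg": YEAR,
    ".png": YEAR,
    ".svg": YEAR,
    ".ico": WEEK,
}


def cache_control(path: str) -> str:
    """Return a Cache-Control header value for a request path (sketch)."""
    max_age = MAX_AGE_BY_EXTENSION.get(PurePosixPath(path).suffix.lower())
    if max_age is None:
        # HTML pages and unknown types: the browser may store them but must revalidate.
        return "no-cache"
    return f"public, max-age={int(max_age.total_seconds())}"


for p in ("/css/site.min.css", "/favicon.ico", "/about/"):
    print(p, "->", cache_control(p))
```

One week is 604,800 seconds and a 365-day year is 31,536,000. Long lifetimes work best when a changed file gets a new URL (for example, a version string or hash in its filename); otherwise returning visitors may keep using a stale copy.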

But does it all work?

Website performance ultimately affects how well your website ranks in search engines and how easily people can access and navigate it. There are many other things to consider besides speed, and they shouldn’t be neglected, but as a web developer I see performance as the finishing touch to getting a website live.

For a new website, it’s hard to measure how much good performance helps, but for an older website you may see an increase in traffic, and perhaps even in sales or enquiries, after making some optimisations.

For a new website, a good start is to run it through Google PageSpeed and the Pingdom Website Speed Test and see how well you score…

TL;DR

I’m fairly happy with this website’s performance!